I wondered why so many test automation scripts fail intermittently, some of them daily. After a deep analysis of more than 20,000 tests, I found that the cause is not primarily the tools, the scripting techniques, or the build. So, what causes most of the failures?

The biggest pain today is choosing the wrong tests to automate.

A “good candidate” for test automation is a test case that runs frequently, protects critical business paths, has predictable outcomes, and is stable across environments. Start with build-verification smoke tests, expand into sanity tests for business-critical flows, and only then grow toward a minimal regression suite, measuring ROI and stability at every stage.

Key Takeaways


- Automate tests that run often and block releases (smoke first).

- Prioritize business-critical sanity flows before long regression suites.

- Don’t scale automation until it’s stable in CI with clear failure evidence.

Who benefits from automation?

Where to Start?

Start by automating the tests you run manually against every build. Do you mean smoke tests first? Yes, the build verification tests. Let’s see what difference that makes.

Assume a test case has about 20 steps with a few verifications. Let’s say the manual effort is 5 minutes for a single iteration, while automating the same test with Selenium WebDriver for a single browser (say, Chrome) may take 120 to 180 minutes or more, including development, testing, and integration.

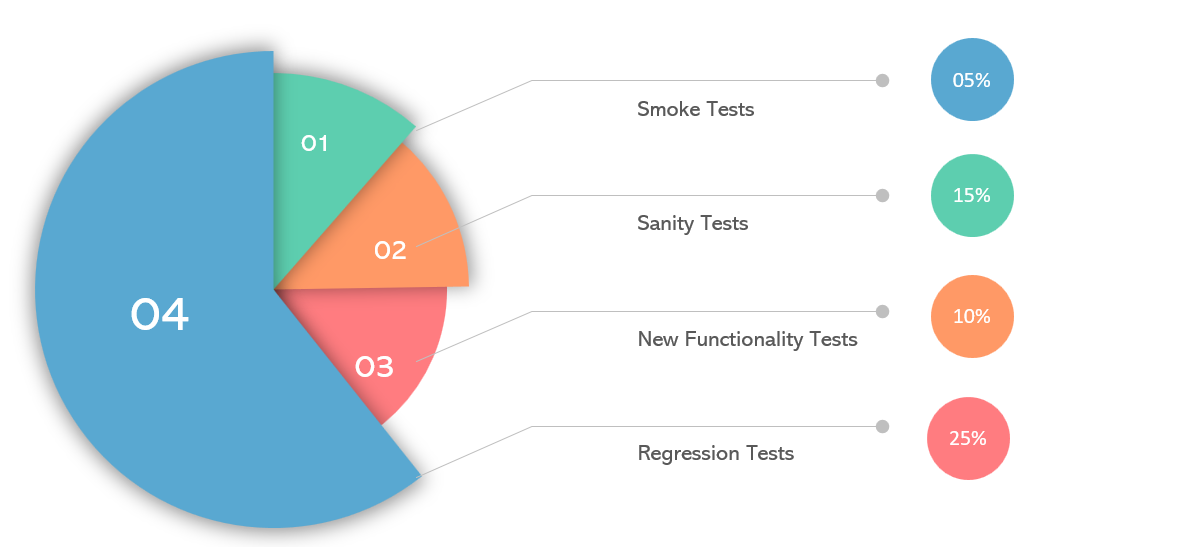

With that said, you need to run your automated test at least 24 times (120 / 5) before it pays back the effort, or 36 times at the 180-minute estimate. That also assumes the test does not break before you reach that point. Okay? Per industry data, smoke tests make up about 5% of the overall test suite, which sounds like a small effort. Correct, and it is the beginning of automation success.
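The break-even arithmetic above can be sketched in a few lines of Python. This is a minimal sketch. It assumes a fixed one-time automation cost plus an optional average maintenance cost per run. `break_even_runs` and its parameters are hypothetical names for illustration. The figures are the estimates from the example above, not measured data.

```python
import math


def break_even_runs(manual_minutes: float,
                    automation_minutes: float,
                    maintenance_minutes_per_run: float = 0.0) -> int:
    """Return the number of runs after which automating a test costs no more
    than running it manually.

    Assumption (illustrative): automation has a one-time build cost plus an
    average maintenance cost per run; manual testing costs the same every run.
    """
    saving_per_run = manual_minutes - maintenance_minutes_per_run
    if saving_per_run <= 0:
        raise ValueError("never breaks even: maintenance >= manual effort per run")
    # Smallest n such that n * saving_per_run >= automation_minutes
    return math.ceil(automation_minutes / saving_per_run)


# The example from the text: a 5-minute manual test, 120-180 minutes to automate.
print(break_even_runs(5, 120))     # 24
print(break_even_runs(5, 180))     # 36
print(break_even_runs(5, 120, 1))  # 30 (with 1 minute of upkeep per run)
```

Even a small per-run maintenance cost pushes the break-even point further out. This is why an automated test that keeps breaking may never pay for itself.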

For India/global traffic, UI behavior can vary due to region-based cookie banners, payment options, and A/B experiments. Validate smoke/sanity candidates in the same CI region and browser setup your users use most.

Can developers use my automated smoke tests?

Yes. Once the smoke tests are stable and running daily in the test environments, they can also be promoted for developers to use.

Why not? Shift Left.

What next?

Then, move on to sanity tests: the business-critical tests that need to be automated so the business cases do not fail in the production environment. It may also be a good idea to automate them with multiple sets of test data. Per industry data, roughly 15% of the overall test suite contributes toward sanity, which sounds like a decent effort. Together, smoke and sanity make up about 20% of the suite, and that is where the Pareto rule comes in (approximately 80% of the effects come from 20% of the causes).
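The data-driven pattern mentioned above runs the same business-critical check against several rows of test data. The following is a minimal, framework-free sketch. `order_total` stands in for a hypothetical business rule; in a real suite the check would drive the UI or call an API. The case data is invented for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Iterable, List


@dataclass(frozen=True)
class SanityCase:
    name: str
    inputs: Dict[str, Any]
    expected: Any


def run_data_driven(check: Callable[..., Any], cases: Iterable[SanityCase]) -> List[str]:
    """Run one check against every data row and collect readable failures,
    so a single bad row does not hide the results of the others."""
    failures: List[str] = []
    for case in cases:
        try:
            actual = check(**case.inputs)
        except Exception as exc:  # report errors as failures and keep going
            failures.append(f"{case.name}: raised {exc!r}")
            continue
        if actual != case.expected:
            failures.append(f"{case.name}: expected {case.expected!r}, got {actual!r}")
    return failures


# Hypothetical rule under test: price in the smallest currency unit,
# 10% off when the coupon "SAVE10" is applied.
def order_total(price: int, qty: int, coupon: str = "") -> int:
    total = price * qty
    return total * 90 // 100 if coupon == "SAVE10" else total


cases = [
    SanityCase("single item", {"price": 50000, "qty": 1}, 50000),
    SanityCase("multiple items", {"price": 50000, "qty": 3}, 150000),
    SanityCase("with coupon", {"price": 50000, "qty": 2, "coupon": "SAVE10"}, 90000),
]
print(run_data_driven(order_total, cases))  # []
```

In pytest the same idea is usually expressed with `@pytest.mark.parametrize`, and in TestNG with a `@DataProvider`.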

Make sense?

Once the sanity tests are developed, what then?

You may be tempted to move on to the next stage. Instead, stay there for a while: run the sanity tests against different browsers, platforms, and data sets to confirm that the automation is stable.
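One way to measure stability is to repeat the sanity suite across a browser × platform matrix and track the pass rate of each combination. This is a minimal sketch under stated assumptions. The browser and platform names, the `run_suite` callable, and the 98% threshold are hypothetical placeholders, not recommendations from the text.

```python
import itertools
from collections import Counter
from typing import Callable, Dict, List, Tuple

Combo = Tuple[str, str]  # (browser, platform)


def stability_report(run_suite: Callable[[str, str], bool],
                     browsers: List[str],
                     platforms: List[str],
                     repeats: int = 10) -> Dict[Combo, float]:
    """Run the suite `repeats` times for every (browser, platform) pair
    and return the pass rate of each pair."""
    report: Dict[Combo, float] = {}
    for browser, platform in itertools.product(browsers, platforms):
        passes = sum(run_suite(browser, platform) for _ in range(repeats))
        report[(browser, platform)] = passes / repeats
    return report


def unstable_combos(report: Dict[Combo, float], threshold: float = 0.98) -> List[Combo]:
    """Pairs whose pass rate falls below the (assumed) stability threshold."""
    return sorted(combo for combo, rate in report.items() if rate < threshold)


# Stand-in for a real suite run: Firefox on Linux fails every 4th attempt.
attempts: Counter = Counter()

def fake_run(browser: str, platform: str) -> bool:
    attempts[(browser, platform)] += 1
    if (browser, platform) == ("firefox", "linux"):
        return attempts[(browser, platform)] % 4 != 0
    return True


report = stability_report(fake_run, ["chrome", "firefox"], ["windows", "linux"], repeats=20)
print(unstable_combos(report))  # [('firefox', 'linux')]
```

Repeating runs on an unchanged build helps separate a flaky test from a real regression. If the same combination fails only some of the time, fix the test before adding more.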

Great idea?

If all goes well, then what?

If all goes well, your automation is now working for you. Time to step up! Move on to a minimal regression suite, and to new functionality if you are doing in-sprint automation.
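You can grow the suite without losing fast smoke feedback. One common approach is to tag each test with its tier and let each pipeline stage select tests cumulatively. The sketch below assumes a hypothetical three-tier scheme and hypothetical stage names. In pytest this is typically done with markers and `-m`, and in TestNG with groups.

```python
from enum import IntEnum
from typing import Dict, List


class Tier(IntEnum):
    SMOKE = 1
    SANITY = 2
    REGRESSION = 3


# Hypothetical mapping: the highest tier each pipeline stage runs.
STAGE_MAX_TIER: Dict[str, Tier] = {
    "pull_request": Tier.SMOKE,
    "nightly": Tier.SANITY,
    "pre_release": Tier.REGRESSION,
}


def select_tests(tests: Dict[str, Tier], stage: str) -> List[str]:
    """Return tests whose tier is at or below the stage's limit, so each
    slower stage also runs everything the faster stages run."""
    limit = STAGE_MAX_TIER[stage]
    return sorted(name for name, tier in tests.items() if tier <= limit)


tests = {
    "test_login": Tier.SMOKE,
    "test_homepage_loads": Tier.SMOKE,
    "test_checkout_upi": Tier.SANITY,
    "test_profile_edit": Tier.REGRESSION,
}
print(select_tests(tests, "pull_request"))  # ['test_homepage_loads', 'test_login']
print(select_tests(tests, "nightly"))       # ['test_checkout_upi', 'test_homepage_loads', 'test_login']
```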

What and how much to automate?

In sum, start with the minimum (smoke and sanity), and then grow your automation suite consistently. Remember, a tree does not bear fruit overnight, and success does not happen in a day. So, start small and stay consistent with your test automation.

What makes a test a good automation candidate?

- High frequency (run every build/PR/nightly)

- High business impact (revenue, login, checkout)

- Deterministic (not dependent on volatile UI/3rd-party timing)

- Clear assertions (pass/fail is objective)

- Stable test data available

- CI-friendly (headless, reliable waits, good locators)
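The checklist above can be turned into a simple screening step when you triage a backlog of manual test cases. This is a minimal sketch. The field names and the equal weighting are assumptions for illustration, not a scoring model from the text. So is the rule that determinism and clear assertions are hard requirements.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class Candidate:
    name: str
    high_frequency: bool    # runs every build/PR/nightly
    high_impact: bool       # revenue, login, checkout
    deterministic: bool     # no volatile UI or third-party timing
    clear_assertions: bool  # pass/fail is objective
    stable_data: bool       # test data is available and stable
    ci_friendly: bool       # headless, reliable waits, good locators


CRITERIA = [f.name for f in fields(Candidate) if f.name != "name"]


def score(c: Candidate) -> int:
    """Count how many checklist criteria the test case meets (0 to 6)."""
    return sum(getattr(c, criterion) for criterion in CRITERIA)


def shortlist(candidates: List[Candidate], min_score: int = 5) -> List[str]:
    """Keep cases that are deterministic with clear assertions (assumed hard
    requirements) and meet at least `min_score` criteria, best first."""
    eligible = [c for c in candidates
                if c.deterministic and c.clear_assertions and score(c) >= min_score]
    return [c.name for c in sorted(eligible, key=score, reverse=True)]


backlog = [
    Candidate("checkout", True, True, True, True, False, True),
    Candidate("login", True, True, True, True, True, True),
    Candidate("captcha_signup", True, True, False, False, True, False),
    Candidate("report_export", False, False, True, True, True, True),
]
print(shortlist(backlog))  # ['login', 'checkout']
```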

Would you like to know more about software testing? Take a software testing course and learn from TestLeaf.

FAQs

What are the best test cases to automate first?

Start with smoke tests that validate the build and cover the most critical user journeys.

Which test cases are NOT good candidates for automation?

Tests with unstable UI, unclear expected results, unreliable test data, or frequent product changes without stable locators.

Should we automate smoke or regression first?

Smoke first. Prove stability and ROI, then expand into sanity and minimal regression.

How do I calculate ROI for automating a test case?

Compare manual run time vs automation build + maintenance time. Automate only if it runs often enough to break even.

Why do automated tests fail more in CI than locally?

CI is slower and different (browser versions, screen size, network). Add explicit waits, stable data, and failure artifacts.
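An explicit wait polls for a specific condition up to a timeout instead of sleeping for a fixed time. In Selenium this is `WebDriverWait` combined with `expected_conditions`. The sketch below illustrates the same idea without a framework, using a hypothetical `wait_until` helper, so it can run without a browser.

```python
import time
from typing import Callable, Dict, Optional, TypeVar

T = TypeVar("T")


class WaitTimeout(Exception):
    pass


def wait_until(condition: Callable[[], Optional[T]],
               timeout: float = 10.0,
               poll: float = 0.5,
               message: str = "condition not met") -> T:
    """Call `condition` every `poll` seconds until it returns a truthy value
    or `timeout` seconds pass. Returns that value; raises WaitTimeout otherwise."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise WaitTimeout(f"{message} after {timeout}s")
        time.sleep(poll)


# Simulated element that becomes "visible" on the third check.
checks: Dict[str, int] = {"count": 0}

def element_visible() -> Optional[str]:
    checks["count"] += 1
    return "#login-button" if checks["count"] >= 3 else None

print(wait_until(element_visible, timeout=2, poll=0.01))  # #login-button
```

With Selenium's Python bindings, the equivalent is `WebDriverWait(driver, 10).until(EC.element_to_be_clickable((By.ID, "login")))`, where `"login"` is a placeholder locator.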

How many test cases should be automated initially?

Keep it small: a stable smoke set first (often a small % of suite), then grow after stability.

We Also Provide Training In:

- Advanced Selenium Training

- Playwright Training

- Gen AI Training

- AWS Training

- REST API Training

- Full Stack Training

- Appium Training

- DevOps Training

- JMeter Performance Training

Author’s Bio:

As CEO of TestLeaf, I’m dedicated to transforming software testing by empowering individuals with real-world skills and advanced technology. With 24+ years in software engineering, I lead our mission to shape local talent into global software professionals. Join us in redefining the future of test engineering and making a lasting impact in the tech world.

Babu Manickam